The Effect of Learning Rate on Accuracy and Loss in Multilayer Perceptron Neural Networks

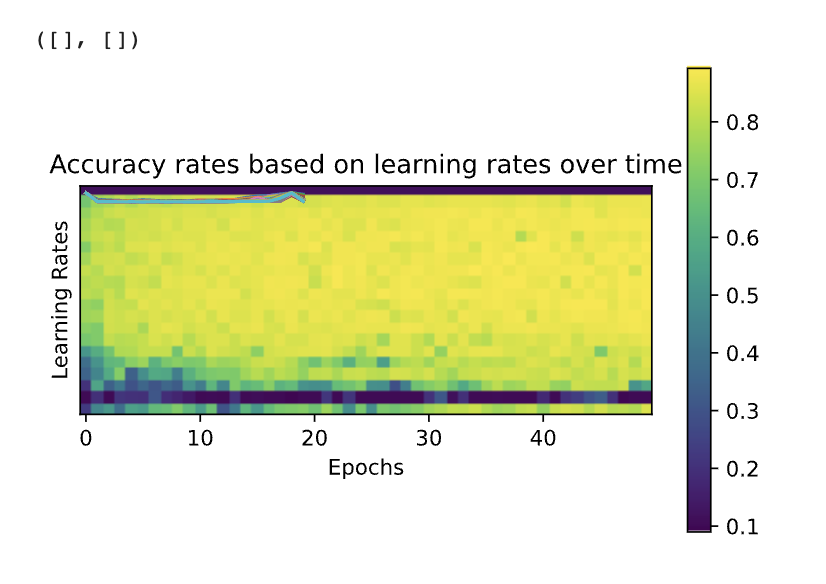

I explored how changing the learning rate of a multilayer perceptron (MLP) affects the network's accuracy and loss. The learning rate is an important hyperparameter because it scales how far the model moves its parameters along the gradient at each update. If the learning rate is too small, each update changes the parameters only slightly, so the model needs many more epochs to converge. If it is too large, updates can overshoot the minimum, causing the loss to oscillate or even diverge instead of settling. I built a series of networks in PyTorch and tested them on the Fashion-MNIST dataset as provided by the d2l (Dive into Deep Learning) library.
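The trade-off between too-small and too-large learning rates can be seen without a neural network at all. The sketch below is an illustration, not the code used in these experiments: it runs plain gradient descent on the toy function f(w) = w², whose gradient is 2w, so every step multiplies w by (1 − 2·lr). The function `gradient_descent` and the three learning rates (0.01, 0.4, 1.1) are hypothetical choices made only to show the three regimes: slow convergence, fast convergence, and divergence.

```python
def gradient_descent(lr: float, w0: float = 1.0, steps: int = 50) -> list:
    """Minimize the toy loss f(w) = w**2 with plain gradient descent.

    Returns the loss value recorded after each update step.
    (Hypothetical helper for illustration; not part of the original experiments.)
    """
    w = w0
    losses = []
    for _ in range(steps):
        grad = 2.0 * w       # derivative of f(w) = w**2
        w = w - lr * grad    # the learning rate scales the size of each step
        losses.append(w * w)
    return losses


# Compare a small, a moderate, and a too-large learning rate.
for lr in (0.01, 0.4, 1.1):
    losses = gradient_descent(lr)
    print(f"lr={lr:<5} loss after 1 step={losses[0]:.3e}  after 50 steps={losses[-1]:.3e}")
```

With lr = 0.01 the loss falls on every step but is still well above zero after 50 steps; with lr = 0.4 it collapses toward zero almost immediately; with lr = 1.1 each step overshoots past the minimum and lands farther away, so the loss grows. For this particular function any learning rate above 1.0 makes |1 − 2·lr| > 1 and the iterates diverge; in a real MLP the safe range depends on the curvature of the loss surface and is not known in advance, which is why it has to be found empirically.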